I haven’t updated my blog in a while.

Lots has changed in the lab:

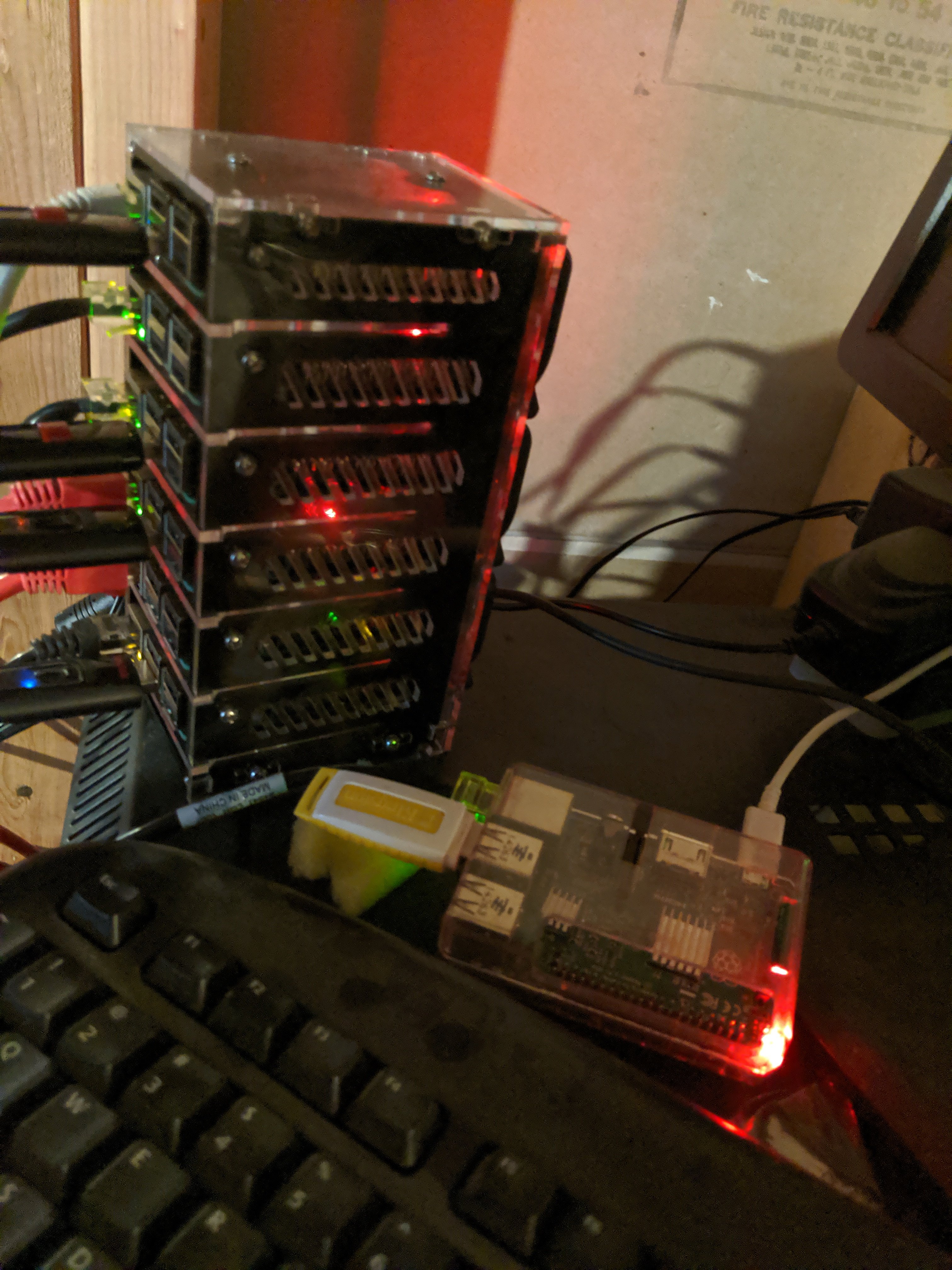

I'm now running a cluster of 4-5 Proxmox servers to play with HA and CEPH, and both have been truly eye-opening when it comes to maintaining 99.x% uptime. With this combination I can update a node, set it to reboot, and watch as it shunts all its HA VMs/CTs to the other nodes, reboots, and takes them back. No more services going down, no more wife/kids screaming, and no more waiting until the dead of night for updates.

A note about these “servers” before I continue: the only real server-grade machine is the one I built in “Lab runneth over”. It's been upgraded over the years and now has dual Intel Xeon E5-2667 v2 CPUs and 256GB of RAM. The rest are used/old hand-me-downs and a repurposed gaming PC I built. You don’t need the best to learn in a home lab.

The cluster also came with a need for 10GbE networking. I beta tested the cluster before making the switch to live, and the biggest problem I ran into (the internet had long told me it would be an issue, but again, beta) was running CEPH over 1GbE. Before bringing it live I bought every node a 10GbE SFP+ NIC and picked up a new eight-port SFP+ switch.
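To put some rough numbers on why 1GbE hurt, here's a quick back-of-the-envelope sketch in Python. It assumes the link runs at perfect line rate with zero protocol overhead (real throughput will be lower, and CEPH replicating data across nodes can multiply the traffic further), and the 500 GB figure and the `transfer_minutes` helper are just hypothetical examples, not something I measured on my cluster.

```python
# Back-of-the-envelope: how long to move a chunk of data between nodes
# at a given link speed? Idealized: assumes full line rate and no protocol
# overhead, which real networks never quite hit.

def transfer_minutes(data_gb: float, link_gbit: float) -> float:
    """Minutes to move data_gb gigabytes over a link_gbit Gb/s link."""
    data_gbit = data_gb * 8          # gigabytes -> gigabits
    seconds = data_gbit / link_gbit  # ideal line-rate transfer time
    return seconds / 60


if __name__ == "__main__":
    # Hypothetical example: 500 GB that has to move between nodes.
    for speed in (1, 10):
        print(f"{speed:>2} Gb/s: {transfer_minutes(500, speed):.1f} minutes")
    # prints:
    #  1 Gb/s: 66.7 minutes
    # 10 Gb/s: 6.7 minutes
```

Ten times the pipe, a tenth of the wait, at least on paper.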

The new switch brought about a complete network design overhaul. Originally it was:

WAN > (1GbE) Router > (10Gb SFP+) 24/2P Switch > Everything

I could have daisy-chained the new 8-port SFP+ switch after the 24/2P, since it had a spare SFP+ port.

Instead I split them:

WAN > (1GbE) Router > (10Gb SFP+, VLANs 1 and 2) 24/2P Switch

WAN > (1GbE) Router > (10Gb SFP+, VLANs 3 and 99) 8P SFP+ Switch (also self-contained VLANs 10 and 11*)

The two switches are then linked and pass their respective VLANs (*other than 10 and 11) between them.

*The new switch brought some new fun. The beta ran CEPH and Proxmox’s cluster traffic over my default “LAN” network. Yeah, not what I wanted, and after the 1GbE test I also didn’t want the firewall/IDS/IPS anywhere near that traffic adding latency, so they both got their own VLANs that only traverse the SFP+ switch.

I tied AD to Authentik, originally for giggles after a random YouTube video. Now everything that can use it does, and while having a single SSO solution by itself was awesome, the real magic came when I figured out MFA and, shortly after, how to use my YubiKey. Now logging into anything is simply: punch in the YubiKey PIN, touch the YubiKey, done.

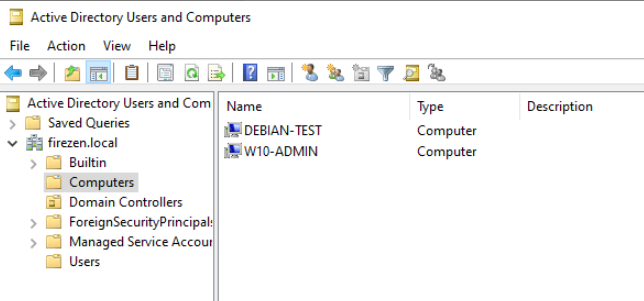

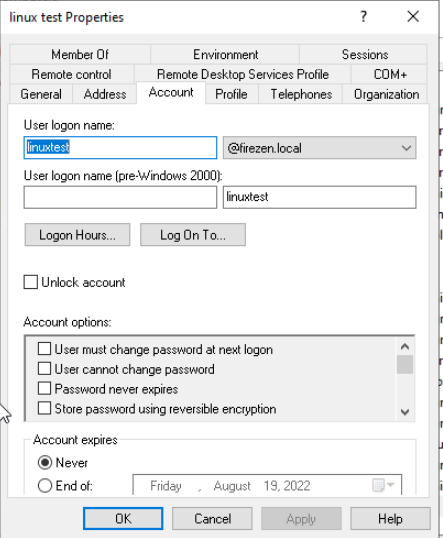

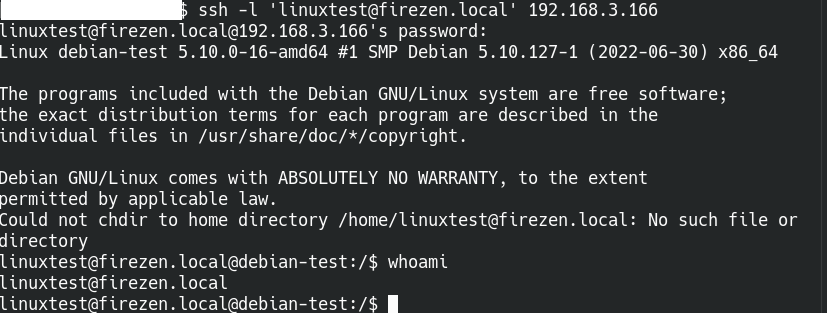

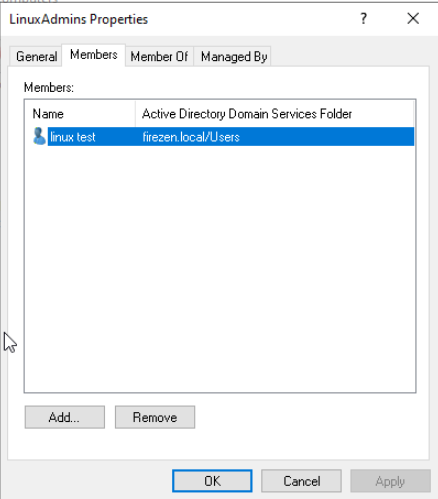

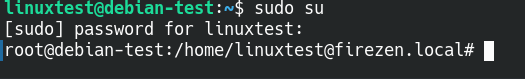

Also a check-in on one of my other posts where I got Linux tied to AD, “Another from the IT Bucket list”: using a combination of that and the above, I have a Debian box tied to AD that I (and a friend who also toys in my lab) can SSO into.

I also tried out various other projects that I’ll give short blurbs about:

Immich: I like it, but it needs a way to stay synced with my Google Photos until I’m ready to actually make the switch.

Graphene (Android ROM): Ran it for a month with Work and Google profiles separated from the “default” one, got exasperated with the constant profile switching, and went back to stock.

A bunch of random AI/LLM stuff: things ranging from following a tutorial on getting Open WebUI started to falling down the rabbit hole of writing Python scripts to make an AI sort my mailbox. Also n8n.

I know I’m probably missing things; it’s been a long time since I posted.

That’s it for now. Thanks for reading.

FireZen